They say necessity is the mother of invention. When Covid hit and the entire post industry went remote, colorists and editors scrambled to give their clients safe solutions that met some basic but important requirements for creative intent. Companies like Streambox were already providing high-quality WAN-based solutions for remote viewing. However, the initial buy-in was expensive and the monthly subscription was high. You also had to install a box on both ends for point-to-point streaming. I had some clients on a tight budget who didn’t want to deal with the box and the pricing, so we just went with Zoom. The Zoom image is TERRIBLE and not usable at all for color observation. Covid was also a strange time when clients didn’t want anyone coming into their home or office to do an installation.

After a couple of weeks of research, I discovered that there just wasn’t a middle-ground solution. Zoom was affordable but shite, and Streambox was pricey. I decided to create an ad hoc solution for remote color sessions and was pleasantly surprised at the quality. If you’ve ever used Twitch to watch live gaming, you’ve probably noticed the impressive image fidelity. Twitch offers high quality at high frame rates with low latency. Why was no one using this platform for remote color sessions? I just needed to validate the entire signal path, end to end, for color accuracy using quantitative methods.

There is a small startup making genius apps for colorists called Time in Pixels. They created the False Colors app as well as the super popular OmniScope plugin for Resolve. They also make a clever program called Nobe Display. The program comes with a Resolve plugin which siphons off an NDI signal from Resolve’s color-managed output and feeds it into your network. NDI is a relatively new video transmission standard, like SDI or HDMI but for a LAN. It’s designed as a simple and lightweight IP workflow ecosystem. It’s a lot like SMPTE 2110 in some high-level ways but not as heavy duty. It was intended to live within a LAN rather than be transmitted over a custom high-bandwidth WAN. Live streaming studios and events, churches, courthouses, city councils, etc. have been built around IP-based video transmission and file sharing, so NDI is an exciting new standard for these markets. You can read more about this revolutionary technology on NewTek’s website. Most of their applications are available for free, and the technology is royalty-free to use (though it is NewTek’s own protocol rather than a formally open standard). The reason I fell in love with NDI is that it was so easy to set up and transmit over my own office network. Once I ran the Nobe Display app and turned on the NDI transmission, I could access the live video feed from anywhere in my home and office using another device with a free NDI monitoring app. The video just appears over the network. Amazing!

OBS Studio can receive this NDI feed as a separate input (with an NDI plugin installed). Then you can set up the custom broadcast settings for the signal and send the live stream to the internet. Of course, nothing is quite that simple when it comes to color management. Initially, I wanted to keep my workflow entirely free (save for buying the Nobe Display plugin for $75.99 US). Although OBS Studio is free, there were some specific limitations regarding signal encoding which did not meet my standards. That’s when I discovered Louper. They’re a sexy startup that reminds me of the early days of Frame.io. They’re basically Twitch but with a client-facing portal, the ability to host webcams from clients, and a live chat window optimized for post production. So now I have a high-quality stream going live over the WAN, with a configurable and testable signal, hosted within a professional, client-friendly platform. I’m getting less than three seconds of latency (I’m based in Brooklyn and I have Verizon FIOS with 1 Gbps up/down; however, I had similar latency over Spectrum in Venice, CA, on a 35 Mbps up/down pipe). I don’t have to use a separate machine for the broadcast. I’m using the same machine that I’m coloring with, connected by a hardline Ethernet cable direct to my FIOS router. The results are blowing my mind. I’m only paying a few bucks a month for a Louper subscription.

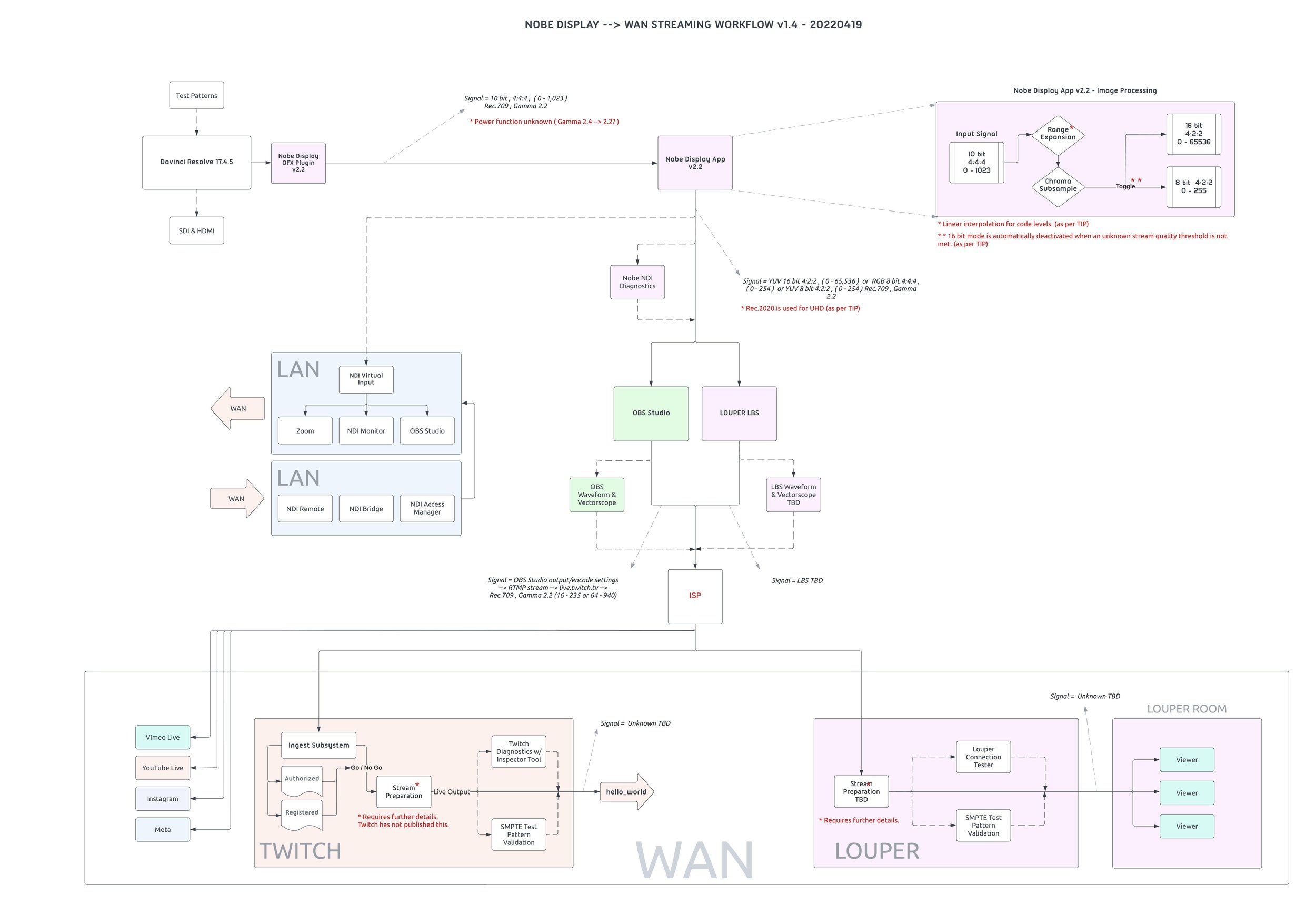

I’ve created a diagram below to show my workflow. Note that the signal can be probed at pretty much any point along the path to validate accuracy. I have yet to verify with Louper exactly what happens to the signal inside their black box; however, they have been very communicative with me and are excited about the prospect of this becoming another use case for their product. Time in Pixels has also been very helpful, providing technical details and feedback on my workflow design.
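
To make "probing the signal" a little more concrete, here is a minimal Python sketch of one way a probe point could be checked: compare the known RGB code values of a few test patches against the values sampled at that point in the chain (for example, from a capture of the client-side viewer). This is not the exact method used in my validation. The patch names, the sample numbers, and the tolerance of 2 code values are all made-up assumptions for illustration.

```python
# Hypothetical sketch: compare reference test-patch values with values
# sampled at a probe point in the chain. All patch names, numbers, and the
# default tolerance are illustration-only assumptions, not measured data.

def channel_errors(reference, measured):
    """Return the absolute per-channel error (8-bit code values) for each patch."""
    errors = {}
    for name, ref_rgb in reference.items():
        meas_rgb = measured[name]
        errors[name] = tuple(abs(r - m) for r, m in zip(ref_rgb, meas_rgb))
    return errors


def max_error(errors):
    """Largest single-channel deviation across all patches."""
    return max(max(e) for e in errors.values())


def within_tolerance(reference, measured, tolerance=2):
    """True if every channel of every patch is within `tolerance` code values."""
    return max_error(channel_errors(reference, measured)) <= tolerance


# Values sent into the chain (assumed test patches).
reference = {
    "gray_50": (128, 128, 128),
    "red": (235, 16, 16),
    "white": (235, 235, 235),
}

# Values read back at the probe point (made-up numbers).
measured = {
    "gray_50": (127, 128, 129),
    "red": (233, 17, 16),
    "white": (235, 234, 235),
}

errors = channel_errors(reference, measured)
for name, err in errors.items():
    print(f"{name}: per-channel error {err}")
print("max error:", max_error(errors))
print("pass:", within_tolerance(reference, measured))
```

Running the same comparison at each probe point (after the NDI hop, after the encoder, at the client viewer) would show where in the chain any deviation is introduced, rather than only that the end result is off.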