A Case Study in Superior Deinterlacing and Upscaling Using Machine Learning Tools

As a colorist and restoration geek with a background in developing and working with A.I. tools, I’ve been searching for novel ways to adapt this growing field of research into practical tools for breathing new life into archival media. This article aims to give documentary filmmakers renewed optimism about the potential of outdated and poorly captured archival media.

The next time you meet a gamer or someone who mines bitcoin, be sure to thank them for their contribution to the film industry! Over the last ten years, radically efficient manufacturing techniques have given computing technology companies like Nvidia and AMD the ability to significantly increase the computational power of their graphics processing units (GPUs). As the cost of computation decreases, the number of calculations per second that we can achieve on our workstations increases. Video games become more realistic, cryptocurrencies can be mined faster, and GPU-intensive plugins and effects within post-production software can be rendered more quickly and with greater precision. This has been a consistent trend over the years and shouldn’t surprise any of us, but a more recent beneficiary of GPU efficiency is beginning to make a name for itself, and its potential to disrupt archival restoration is huge. Allow me to introduce you to machine learning.

In the winter of 2017 I co-founded a technology company which utilizes A.I. tools like computer vision and machine learning to automate image processing and analysis for customers with large collections of satellite or aerial imagery. We received a large grant from New York State through the Genius New York accelerator to hire a team of computer scientists to train a computer using GPUs to stitch, analyze, and organize tens of thousands of high resolution images. Not unlike teaching a small child, we spent months teaching the computer and grading its results in order to train a “model” which could present us with a product that met industry standards. At first the models were about as intelligent as a banana. Initial results seemed random and erroneous. After weeks of training, the model finally began to show promise, with results resembling the accuracy you might expect from a toddler. Using a type of supervised reinforcement learning, we were able to feed positive results back into the model to “reward” the computer when it made correct choices. Within a few months the model was so accurate that it was able to compete with and often surpass adult human performance. Machine learning experts sometimes refer to these models as “little robots”. You teach them how to do one thing very well and then you can store that model for later use as part of an algorithm. The downside to this approach is that because it must be supervised by a human being, it is unable to learn at the speed of a machine. The human is the bottleneck in the supervised process. There have since been quantum leaps in machine learning methods which allow for unsupervised learning and are achieving similar results in shorter turnaround times.

Although the model that we created is of course proprietary, machine learning researchers are training computers to solve thousands of problems around the world every day and publishing their code to the web as open source. This in turn allows other researchers and hobbyists to tinker and innovate to solve other novel challenges. The diversity of these tiny robots is becoming a vast ecosystem that permeates nearly every industry from social media to food safety. Over the last several years we’ve begun to see the film industry incorporate machine learning into post production to support services like transcription, facial/character recognition, streaming video optimization, and even visual effects. The latest version of DaVinci Resolve (v17 beta as of this writing) has incorporated multiple A.I. tools into its platform to assist in power window tracking and automated object removal. While these tools are still young, I use them nearly every day in my work to the point that I take the technology for granted!

Typically, filmmakers will use the standard deinterlacing tool in their editing software; however, this article will demonstrate why this approach destroys the image.

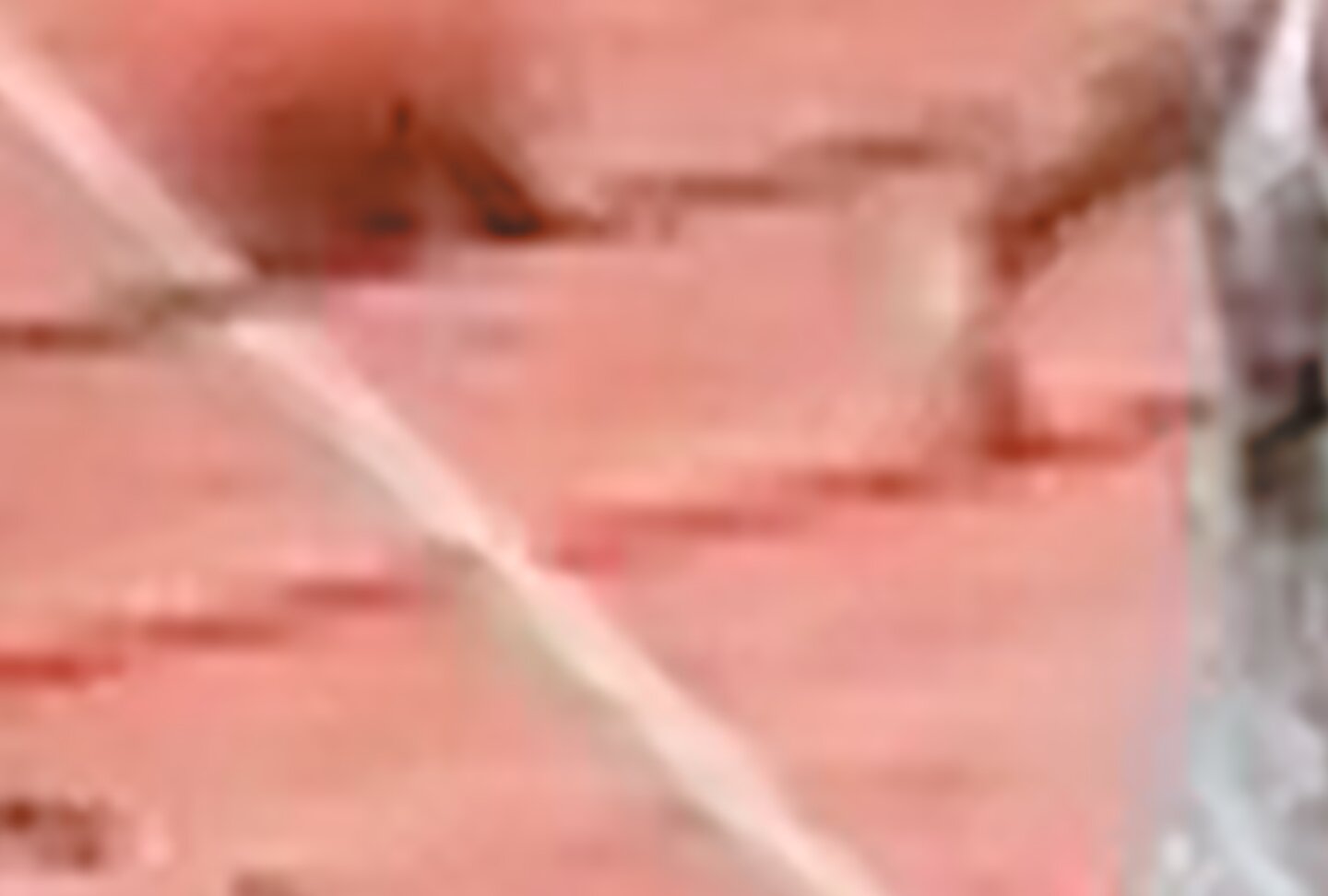

Badly Deinterlaced Source Media

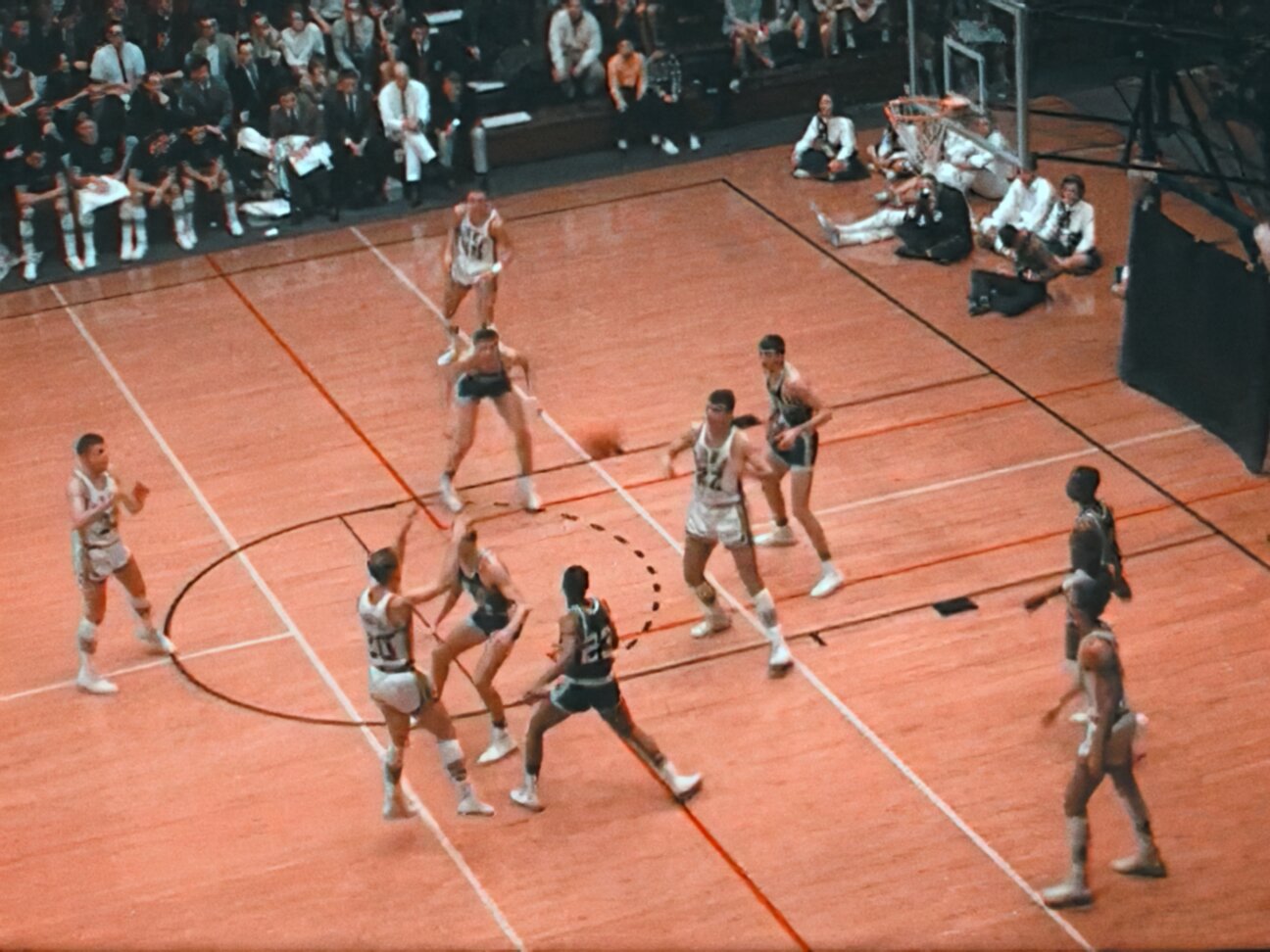

Duke University - Department of Athletics - Duke vs Penn State Basketball 1965

In this case study, I decided to use a worst case scenario in order to exhibit the power of what I believe is the future of interlaced archival restoration. Our source material is a progressive 720x486 MPEG-4 AVC file which was improperly deinterlaced, with the interleaved fields burned into a single frame (or instant). This is usually a deal breaker for restoration in that two separate moments in time are displayed simultaneously within a single raster. The goal was to separate the two fields cleanly, interpolate the missing field lines, remove as many spatial and temporal artifacts and as much noise as possible, and then finally upscale to increase the apparent resolution.
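To make the idea of two fields sharing one raster concrete, here is a minimal Python sketch. It is not the pipeline used in this case study: the frame is a toy grid of brightness values, the helper names (`field_with_bar`, `weave`, `split_fields`) are made up for illustration, and it assumes the top field sits on the even rows. Real footage that was compressed after the fields were merged is unlikely to separate this cleanly.

```python
WIDTH, HEIGHT = 8, 6  # toy raster size, chosen only for illustration


def field_with_bar(column, rows):
    """Build one field: black rows with a single bright pixel at `column`."""
    return [[255 if x == column else 0 for x in range(WIDTH)] for _ in range(rows)]


def weave(top, bottom):
    """Interleave two fields into one frame (top field on even rows)."""
    frame = []
    for top_row, bottom_row in zip(top, bottom):
        frame.append(top_row)
        frame.append(bottom_row)
    return frame


def split_fields(frame):
    """Undo the weave: even rows are the top field, odd rows the bottom field."""
    return frame[0::2], frame[1::2]


# Two moments in time: the bar has moved from column 2 to column 5.
top_in = field_with_bar(2, HEIGHT // 2)
bottom_in = field_with_bar(5, HEIGHT // 2)

# Burning both fields into one frame produces the familiar "combing".
woven = weave(top_in, bottom_in)
for row in woven:
    print("".join("#" if value else "." for value in row))

# Separating the rows recovers each moment on its own, at half height.
top_out, bottom_out = split_fields(woven)
```

Each recovered field has only half the rows of the full frame, which is why the missing lines then have to be interpolated.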

The below images were exported from the original media with both upper and lower fields combined to show the apparent resolution if viewing from an interlaced display. Although the first image in the slideshow below is presented in an interlaced format, I’ve combined the fields in the remaining images for the purpose of detail examination in this case study. It is not possible to exhibit interlaced images on modern digital flat panel displays.

You’ll notice that although the below images are sourced from a non-interlaced render, the lines drawn on the floor of the court are relatively smooth and consistent, albeit fuzzy from being low resolution.

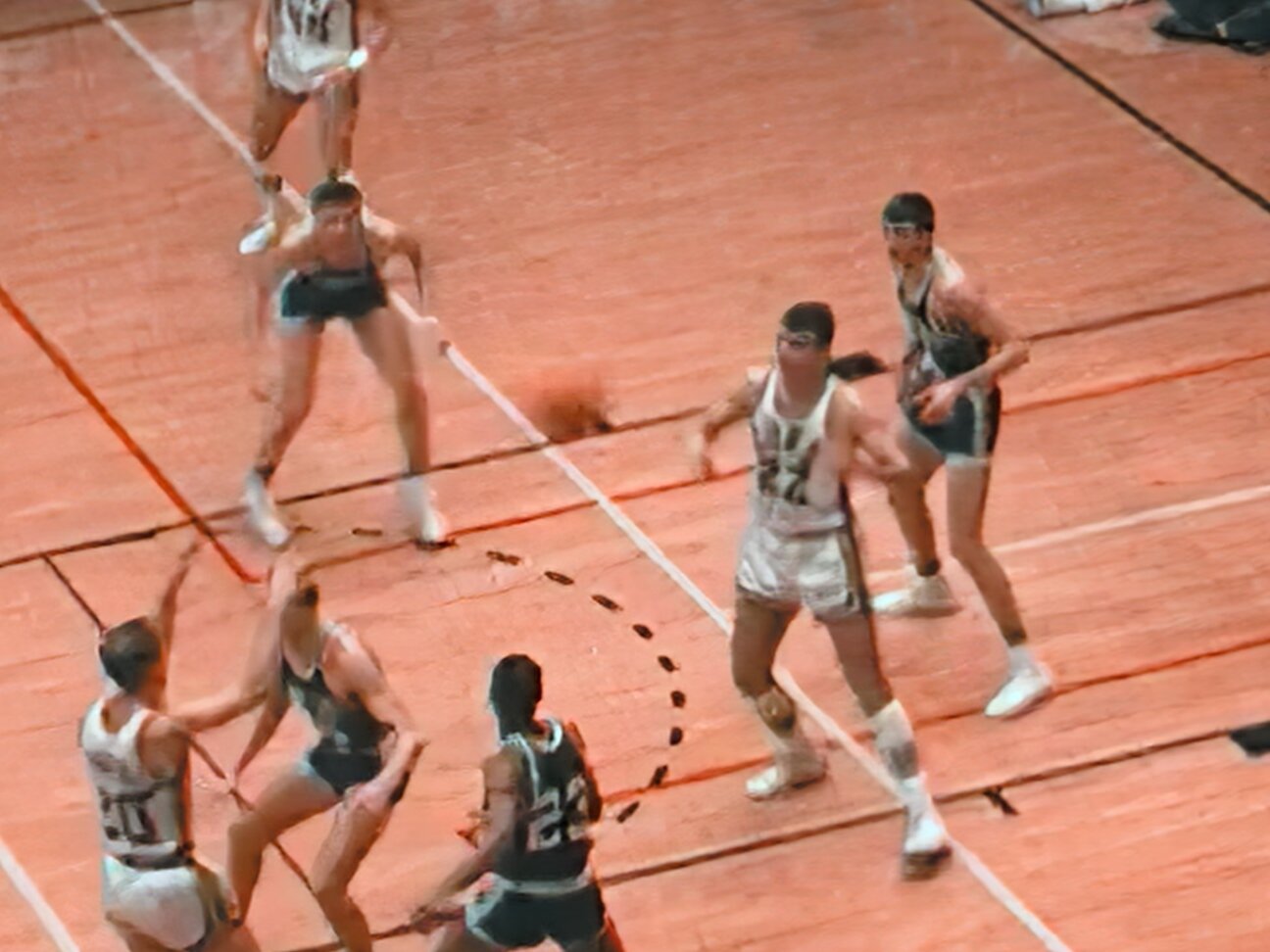

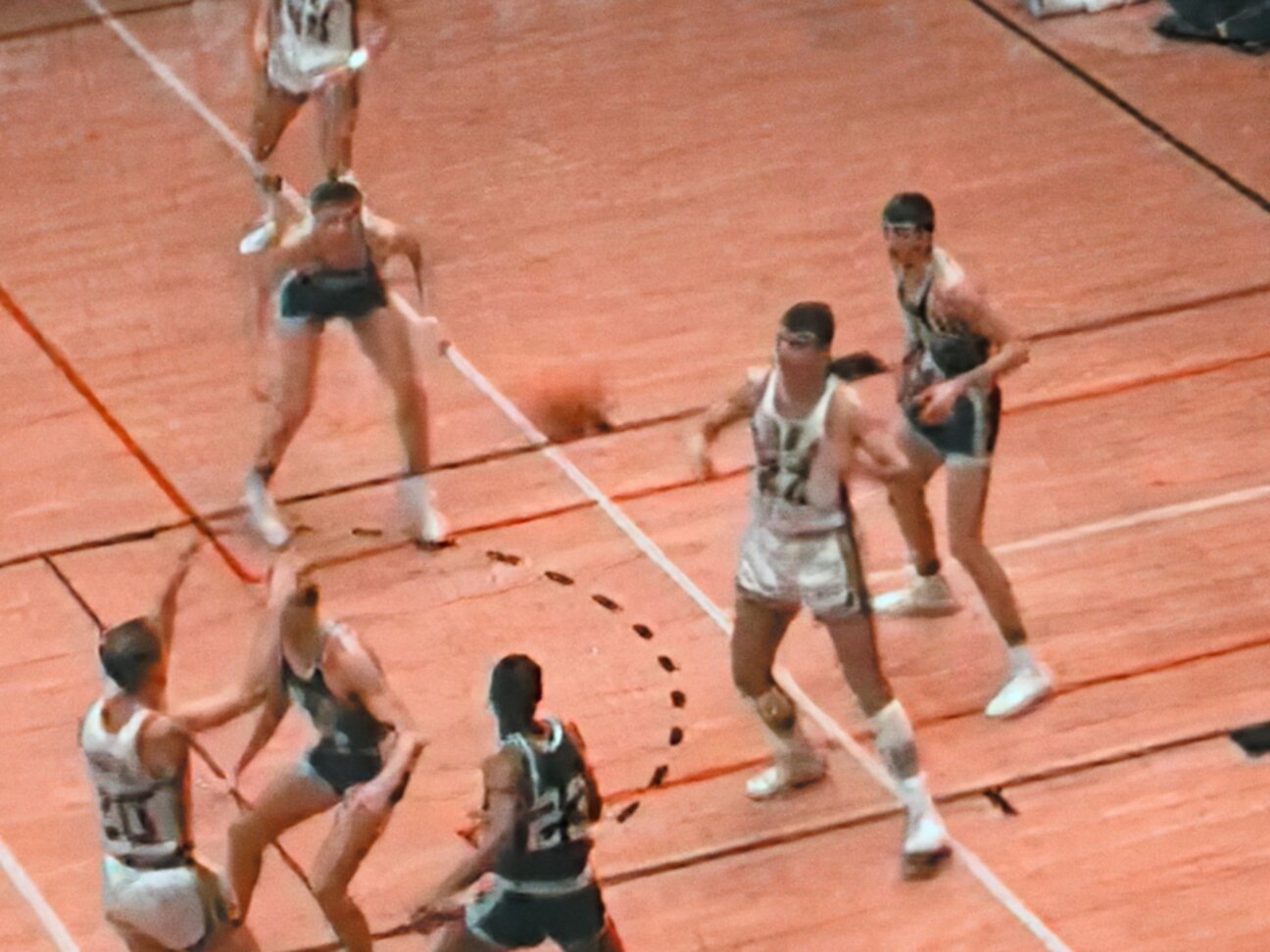

Deinterlacing Using Standard NLE Tools

The below images were deinterlaced in DaVinci Resolve using the standard deinterlacing algorithm. I had very similar results using Premiere Pro’s deinterlacing tool. The primary issue with standard deinterlacers is that they rarely incorporate field interpolation into the process. This means that the deinterlacer is essentially removing an entire field from the image, thus cutting the vertical resolution in half! With half of the horizontal scan lines removed, an unpleasant stair-stepping appears along any line or shape that is not perfectly vertical or horizontal. Notice the black and red free throw lines painted on the floor of the court. They have a noticeable jagged appearance which immediately betrays the loss in vertical resolution. It is now impossible to upscale this image properly because any artifact that results from improper deinterlacing will be sharpened.

Deinterlacing results using the free tool inside DaVinci Resolve
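The stair-stepping described above can be reproduced on a toy image. The Python sketch below is an illustration under stated assumptions, not the algorithm inside any particular NLE: it discards the bottom field of a diagonal edge, then fills the missing rows either by repeating each kept row (line doubling) or by averaging the rows above and below (a very basic form of field interpolation). All function names are hypothetical.

```python
def diagonal_edge(size):
    """A clean diagonal edge: the bright region starts one pixel later on each row."""
    return [[255 if x >= y else 0 for x in range(size)] for y in range(size)]


def keep_top_field(frame):
    """Discard the bottom field by keeping only the even rows."""
    return frame[0::2]


def line_double(field):
    """Fill the missing rows by repeating each kept row."""
    out = []
    for row in field:
        out.append(list(row))
        out.append(list(row))
    return out


def interpolate_lines(field):
    """Fill the missing rows with the average of the kept rows above and below."""
    out = []
    for i, row in enumerate(field):
        out.append(list(row))
        nxt = field[i + 1] if i + 1 < len(field) else row  # repeat the last row at the bottom
        out.append([(a + b) / 2 for a, b in zip(row, nxt)])
    return out


def edge_positions(frame):
    """Column of the first fully bright pixel on each row."""
    return [next(x for x, value in enumerate(row) if value == 255) for row in frame]


frame = diagonal_edge(8)
field = keep_top_field(frame)
doubled = line_double(field)
interpolated = interpolate_lines(field)

print(edge_positions(frame))    # the edge advances one column per row
print(edge_positions(doubled))  # the edge advances two columns every two rows
print(interpolated[1])          # rebuilt row: intermediate grays soften the step
```

In the line-doubled result the edge moves in two-pixel jumps, which is the jagged look on the free throw lines. The interpolated rows carry intermediate values instead, so the step is softened rather than duplicated.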

Deinterlacing With Standard NLE With Upscaling

The below images were deinterlaced in DaVinci Resolve using the standard deinterlacing algorithm, with the additional step of a bicubic up-sampling filter to increase the apparent resolution. Unfortunately, due to the jagged artifacts resulting from the standard NLE deinterlacing method, the upscaling is a hot mess. Some of the compression artifacts are also artificially sharpened by the bicubic sampling and thus call attention to themselves with the tell-tale “sparkling”.

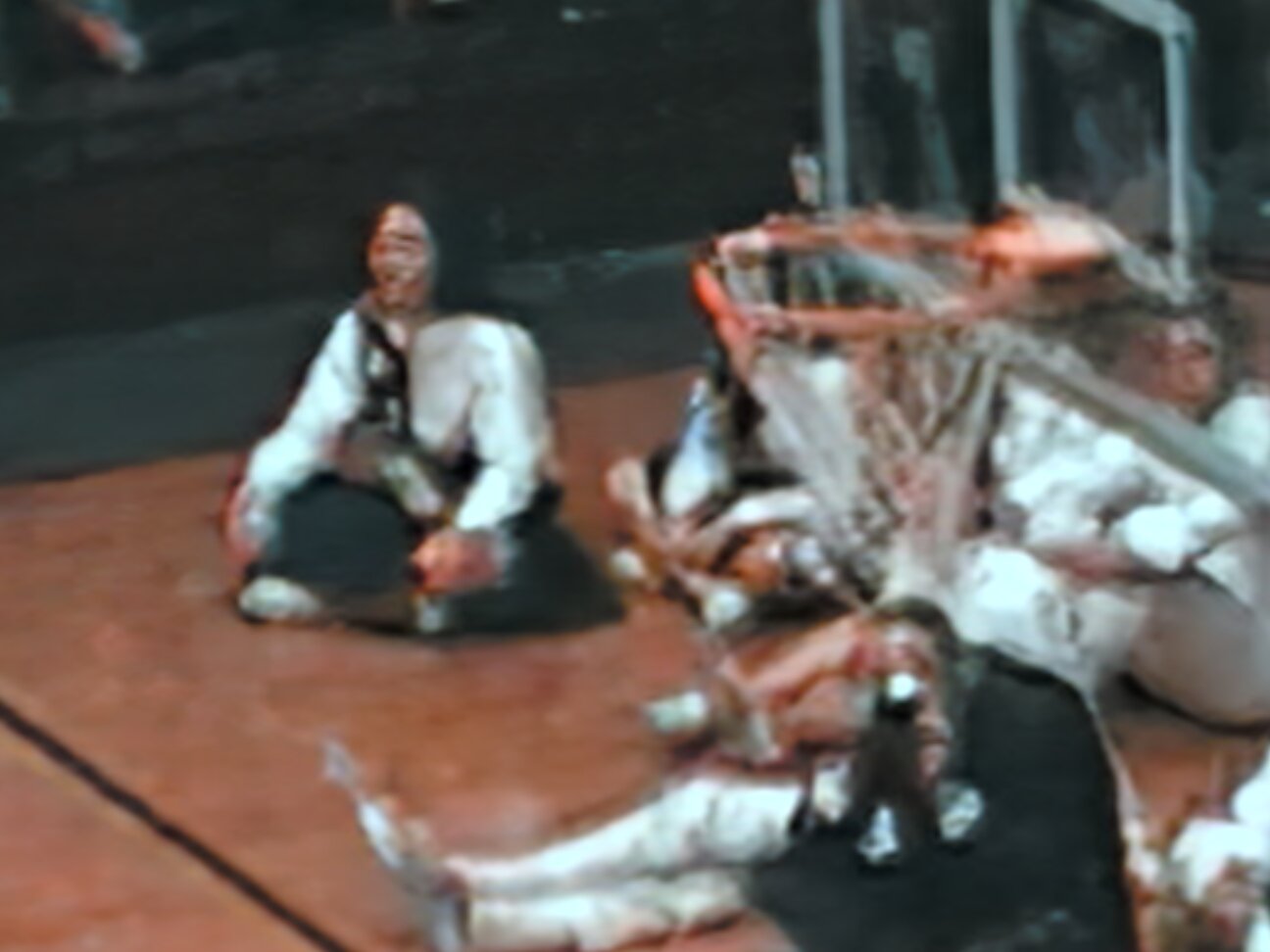
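The sharpening side effect of bicubic sampling can be illustrated in one dimension. The Python sketch below upsamples a hard edge by 2x with a cubic convolution kernel (the Keys kernel with a = -0.5, a common choice for bicubic filters). Whether any particular NLE uses this exact kernel is an assumption, and the function names are hypothetical.

```python
import math


def keys_kernel(x, a=-0.5):
    """Cubic convolution (Keys) kernel; a = -0.5 is assumed here."""
    x = abs(x)
    if x <= 1:
        return (a + 2) * x**3 - (a + 3) * x**2 + 1
    if x < 2:
        return a * x**3 - 5 * a * x**2 + 8 * a * x - 4 * a
    return 0.0


def cubic_upsample(samples, factor=2):
    """Resample a 1-D signal on a grid `factor` times denser, clamping at the edges."""
    last = len(samples) - 1
    out = []
    for i in range(last * factor + 1):
        position = i / factor
        base = math.floor(position)
        value = 0.0
        for k in range(base - 1, base + 3):  # the four nearest source samples
            clamped = min(max(k, 0), last)
            value += samples[clamped] * keys_kernel(position - k)
        out.append(value)
    return out


# A hard edge, such as the side of a stair-step left by a dropped field.
edge = [0, 0, 0, 100, 100, 100]
upsampled = cubic_upsample(edge)
print(upsampled)
print(min(upsampled), max(upsampled))  # values fall outside the original 0..100 range
```

Because the kernel has negative lobes, the output dips below the dark level just before the edge and rises above the bright level just after it. On a clean edge this reads as crispness, but on stair-steps and compression blocks it exaggerates exactly the defects we want to hide.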

Deinterlacing and Deconvolutional Upscaling Using Machine Learning

The below images were deinterlaced using a more sophisticated process in VapourSynth which interpolates the missing field lines while compensating for motion-induced artifacts. Once a more robust progressive frame was constructed, I was able to deblock, denoise, and upscale the image using deconvolution algorithms derived from machine learning. The resulting image and corresponding video exhibited a significant increase in both apparent and spatial resolution.
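As a rough illustration of why interpolating only where motion has corrupted the frame beats discarding a field outright, here is a simplified Python sketch. It is not the VapourSynth process described above: it works on a single toy frame, has no motion compensation across frames, and uses a made-up function name and threshold. Each bottom-field pixel is kept when it agrees with its vertical neighbors and is replaced with their average when it does not.

```python
def comb_masked_deinterlace(frame, threshold=32):
    """Rebuild the top field's moment in time at full height.

    Even rows (top field) are kept as they are. Each odd-row pixel is kept when
    it is close to the average of the pixels above and below it, and is replaced
    by that average when it differs by more than `threshold` (assumed to be combing).
    """
    height = len(frame)
    out = [list(row) for row in frame]
    for y in range(1, height, 2):
        above = frame[y - 1]
        below = frame[y + 1] if y + 1 < height else frame[y - 1]  # clamp at the bottom
        for x, value in enumerate(frame[y]):
            estimate = (above[x] + below[x]) / 2
            if abs(value - estimate) > threshold:
                out[y][x] = estimate
    return out


# Toy frame: flat gray background with a bright bar that moved between fields.
top_row = [50, 250, 50, 50, 50, 50]     # bar at column 1 in the top field
bottom_row = [50, 50, 50, 50, 250, 50]  # bar at column 4 in the bottom field
combed = [top_row, bottom_row, top_row, bottom_row, top_row, bottom_row]

restored = comb_masked_deinterlace(combed)
for row in restored:
    print(row)
```

Static background pixels from the bottom field survive untouched, so no resolution is thrown away where nothing moved, while the displaced bar is rebuilt from its neighbors. A single-frame test like this will also mistake fine static detail for combing, which is one reason practical tools look at neighboring frames as well.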

Agitation and the Uncanny Valley

Although the fidelity of the above image set has objectively improved, the results have taken a turn toward the uncanny valley. In order to dial back this unnatural effect, I sprinkled some secret sauce into the pipeline in order to agitate the image. The following slideshow is the final output of my process which I believe provides an impressive increase in resolution while remaining faithful to the original image.

Moving Image Result

Below is the first draft of my process in all of its naked splendor in a side by side comparison with the original source video (deinterlaced for fairness). I believe there is still some tweaking to do in order to further reduce the temporal artifacts as well as the uncanny valley; however, we’re certainly making progress!