Abstract: How effective are state-of-the-art AI-based colorization models?

Results: AI-based colorization models are improving quickly, and it's only a matter of time before we have models that provide realistic and consistent results. (The DeepRemaster model even colored the pupils of people's eyes!)

Definition: Although the model I've used is called DeepRemaster, this footage has not been "remastered". Colorization is a "revision" of the media and does not reflect the creative intent of the filmmaker. I've only run a light dust-busting filter and a deblocking algorithm over the film prior to input.

Disclaimer: It’s important to note that flesh tone generated by a computer is treading within a sensitive cultural realm. The “tones and hues” represented in this test are not meant to accurately represent flesh tone, but rather, provide a generalized colorization. As I point out in further detail in my results below, there is much work to do and I’m hoping that real-world testing like this will provide useful feedback for the humans that train the machines which then create the models.

I've been both curious about and skeptical of the automated colorization models available for video for the past four or five years. Colorized and frame-interpolated archival enhancement videos have become viral hits on YouTube, garnering tens of millions of views. This is fascinating considering the films were shot over a century ago. (STILL GOT IT!!!) The topic has become a popular sub-genre ever since prosumer software like Topaz Video Enhance AI and Gigapixel AI was released a few years ago. All of a sudden, everyone started using AI to "upscale" their archival media without really understanding just how badly these tools were decimating the original detail of the archival. Admittedly, I was initially fascinated myself, but after using these tools a few times, I realized they were throwing out a ton of detail and making people look like interdimensional creatures made of plastic. Strangely, most audiences these days don't seem to notice the artifacts inherent in these processes. Peter Jackson's recent series The Beatles: Get Back gave us a glimpse of just how much detail film studios are willing to throw out so long as AI tools make archival appear "better". HBO's recent documentary George Carlin's American Dream is a good example of just how far filmmakers can push into the uncanny valley. But I digress…

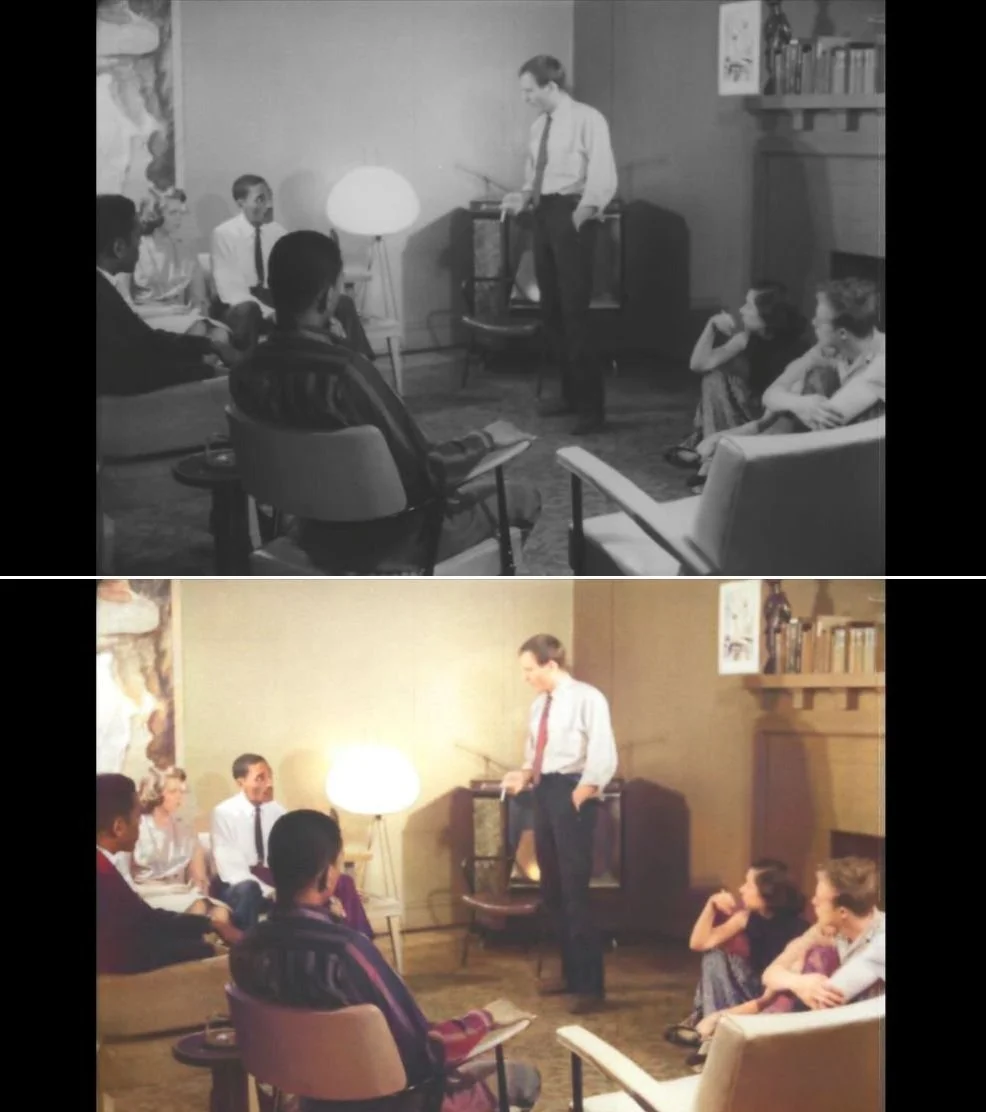

Colorizing models like DeOldify do seem to make an effort to paint flesh tone on faces and other exposed body parts to a reasonable degree, but the rest of the image elements take on a random assortment of muted hues. Ironically, the models impart the hand-painted characteristics of colorized photos of yore. At first glance these AI models appear to colorize the image; however, upon closer inspection this assumption falls apart. In this article, I'll show you some tests that I've done recently using an archival film called The Cry of Jazz, which I've sourced from the Prelinger Archive. I've used this particular film for testing because it has a range of skin tones, it's in the public domain, and the source quality is relatively decent. It's certainly not a high-quality scan of the original print, but it hasn't been encoded into oblivion.

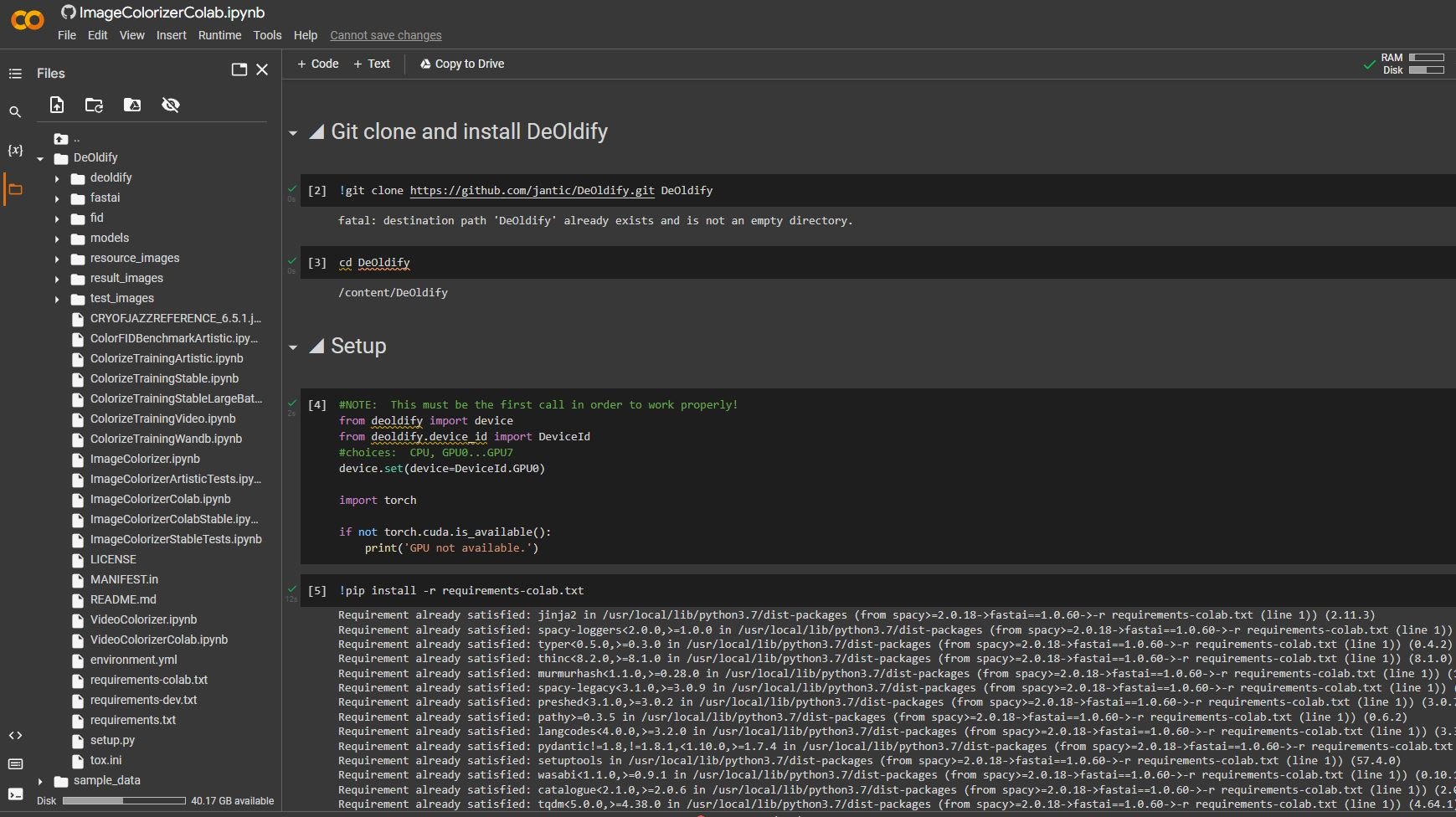

Google Colab

If you don't have a powerful Linux or Windows machine to install and run virtual environments for models like DeOldify, you can simply log in to your Google account and run a Colab session for free. Google Colab is a cloud-based computing platform available for public use that provides free access to powerful GPUs. Once you've logged into your Google account, you can visit the DeOldify Colab and begin testing.

Source Monochromatic Images:

You'll need to export a frame from your source archival video as a JPEG or PNG, then colorize it using the DeOldify model. You can run the model locally on your machine or via Colab. These frames will be important for the video colorization process later. For this test, I exported JPEG images taken from the first frame of each video clip I wanted to colorize.
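If you have a folder full of clips, grabbing the first frame of each one can be scripted. The sketch below is a minimal example that assumes `ffmpeg` is installed and on your PATH and that the clips are `.mp4` files; the function names are hypothetical. It defaults to PNG to avoid another round of lossy compression, but passing `ext="jpg"` writes a JPEG like the ones I used.

```python
import shutil
import subprocess
from pathlib import Path


def frame_grab_cmd(clip: Path, out_dir: Path, ext: str = "png") -> list[str]:
    """Build an ffmpeg command that writes only the first video frame of `clip`."""
    out_path = out_dir / f"{clip.stem}.{ext}"
    return [
        "ffmpeg",
        "-y",              # overwrite an existing still without prompting
        "-i", str(clip),   # source archival clip
        "-frames:v", "1",  # stop after the first video frame
        str(out_path),     # image format is inferred from the file extension
    ]


def grab_first_frames(clip_dir: Path, out_dir: Path, ext: str = "png") -> list[Path]:
    """Export the first frame of every .mp4 clip in `clip_dir` into `out_dir`."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on PATH")
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for clip in sorted(clip_dir.glob("*.mp4")):
        cmd = frame_grab_cmd(clip, out_dir, ext)
        subprocess.run(cmd, check=True, capture_output=True)
        written.append(Path(cmd[-1]))
    return written
```

Each still is named after its clip (`scene01.mp4` becomes `scene01.png`), which makes it easy to match the colorized stills back to their clips later.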

Colorized Still Images Using DeepRemaster:

*Note that the model colorized her pupils to a beautiful chestnut hue! Wow. Very cool detail. (though we have no idea what her eye color was in real life)

*Note that the model colorized the hardwood floor the color of wood. Very cool. But the model failed to colorize the woman’s right arm and hand. The man’s white shirt is perfectly clean with a neutral white point. Even the painting tacked to the wall has been given a bit of an artful colorization.

DeepRemaster

Once you have colorized your frame grabs, you can now use them as reference images for the corresponding video clips you’d like to colorize. You’ll need to upload them along with your archival video clip before you can run the DeepRemaster model in Colab.

DeepRemaster will now reference these colorized stills and apply a matching colorization to the corresponding video clip.
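When you're working with more than one clip, it helps to keep each clip's reference stills in a folder of their own before uploading. This is a minimal sketch that assumes `.mp4` clips and colorized stills named after their clip (for example `scene01.mp4` and `scene01.png`); the folder layout and the function name are hypothetical, not something DeepRemaster requires.

```python
import shutil
from pathlib import Path


def stage_references(clip_dir: Path, still_dir: Path, ref_root: Path) -> dict[str, Path]:
    """Copy each colorized still into a folder named after its matching clip.

    Returns a mapping of clip name -> reference folder. Clips without a
    matching still are skipped.
    """
    staged = {}
    for clip in sorted(clip_dir.glob("*.mp4")):
        matches = sorted(still_dir.glob(f"{clip.stem}.*"))
        if not matches:
            continue  # no colorized reference still for this clip
        ref_dir = ref_root / clip.stem
        ref_dir.mkdir(parents=True, exist_ok=True)
        for still in matches:
            shutil.copy2(still, ref_dir / still.name)
        staged[clip.stem] = ref_dir
    return staged
```

As far as I recall, the public DeepRemaster repository's README runs the model along the lines of `python remaster.py --input clip.mp4 --reference_dir references/clip --gpu`, so one folder per clip maps onto that nicely; check the flags against the repository before relying on them.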

DeepRemaster Results

Needless to say, the initial results are stunning. We've certainly come a long way with this technology in the past five years, and DeepRemaster is a significant step forward. A few issues keep coming up, which I've listed below. I have not done any sort of quantitative testing to validate these observations.

RESTORATION

One issue I currently have with DeepRemaster is that the model also seems to be removing what it believes are unwanted artifacts. I would prefer to use my own methods to restore the image prior to colorization. I am, however, still learning to use the parameters of the model so this is something I might be able to address.

FLESH TONE

Darker skin tones don’t seem to be rendering as well as lighter skin tones. I’m not implying that this artifact is the result of the model not being trained with enough darker skin tones. This might be an issue with the model’s ability to segment and effectively colorize areas of the image with lower luminance values. Perhaps there is some sort of sweet spot for flesh tone exposure which I’m not taking advantage of. I have not color graded these source images before or after the fact. I’ve only cleaned up the dust and scratches a bit before colorizing. This might also simply be due to the specific nature of this test material. I need to run other media through the model to see if this is consistent.
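One cheap way to hunt for that exposure sweet spot is to feed the model a few bracketed versions of the same monochrome frame and compare the results. The sketch below is my own assumption of how to do that, not part of DeOldify or DeepRemaster: it applies a simple power-function (gamma) adjustment to a frame stored as floats in [0, 1], and the bracket values are arbitrary starting points.

```python
import numpy as np


def exposure_brackets(gray: np.ndarray, gammas=(0.8, 1.0, 1.25, 1.5)) -> dict[float, np.ndarray]:
    """Return gamma-adjusted copies of a monochrome frame with values in [0, 1].

    out = in ** (1 / gamma): gamma > 1 lifts shadows and midtones, gamma < 1
    darkens them, and 1.0 returns the untouched frame. Pure black and pure
    white stay where they are.
    """
    gray = np.clip(gray, 0.0, 1.0)
    return {g: gray ** (1.0 / g) for g in gammas}
```

Each variant can be saved (for example with Pillow) and colorized separately; if the lifted versions render darker skin tones noticeably better, that would point toward exposure rather than training data.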

MAGENTA SHADOWS

As I mentioned above, DeepRemaster struggles with darker image areas, and it often (though not always) has trouble rendering realistic shadows. This becomes particularly problematic because the darker image areas take on a magenta wash. You can see in the tests that the darker areas shift toward the line of purples, which gives the results a global magenta feel.
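To put a number on the magenta wash rather than eyeballing it, you could average the chroma of only the darkest pixels in a colorized frame. This is a minimal sketch assuming a gamma-encoded RGB frame stored as floats in [0, 1]; it uses BT.601 luma and color-difference scaling, and the shadow threshold of 0.25 is an arbitrary assumption. In YCbCr, magenta sits at positive Cb and positive Cr, so two positive means suggest the shadows are leaning toward the purples.

```python
import numpy as np


def shadow_cast(rgb: np.ndarray, luma_max: float = 0.25) -> tuple[float, float]:
    """Mean (Cb, Cr) of the pixels whose luma falls below `luma_max`.

    rgb: float array in [0, 1] with shape (H, W, 3), gamma-encoded.
    Uses BT.601 weights; Cb and Cr are scaled to roughly [-0.5, 0.5].
    Returns (nan, nan) when no pixel is dark enough.
    """
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = (b - y) / 1.772
    cr = (r - y) / 1.402
    mask = y < luma_max
    if not mask.any():
        return float("nan"), float("nan")
    return float(cb[mask].mean()), float(cr[mask].mean())
```

A frame can be loaded with Pillow via `np.asarray(Image.open(path).convert("RGB"), dtype=np.float64) / 255.0`. Neutral shadows should come out close to (0, 0).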

INCONSISTENT IMAGE SEGMENTATION

The model often does not recognize things like hands and legs when they take on abstract angles or perspectives. You'll notice that sometimes people's faces and limbs drift in and out of color. I would not attribute this to compression artifacts or large macroblocking, since I deblocked the source and cleaned other compression artifacts out of it before testing. This is certainly one of the major issues with the current model: if this technology is ever to see massive industry adoption, consistency will be paramount. The good news is that this is a popular field of research and the models keep improving. It will only be a matter of time before we get there.
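Faces and limbs drifting in and out of color can be flagged frame by frame. The sketch below assumes you've decoded a clip into a float array of shape (frames, height, width, 3) in [0, 1] and picked a box around a face or limb by hand; the box coordinates and the 0.5 ratio are hypothetical choices. It measures the average BT.601 chroma magnitude inside the box and reports frames that fall well below the clip's median.

```python
import numpy as np


def chroma_per_frame(frames: np.ndarray, box: tuple[int, int, int, int]) -> np.ndarray:
    """Mean chroma magnitude inside box=(top, left, bottom, right) for each frame.

    frames: float array in [0, 1] with shape (T, H, W, 3). Uses BT.601
    color-difference scaling, so a fully neutral (gray) region scores 0.
    """
    top, left, bottom, right = box
    roi = frames[:, top:bottom, left:right, :]
    r, g, b = roi[..., 0], roi[..., 1], roi[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = (b - y) / 1.772
    cr = (r - y) / 1.402
    return np.sqrt(cb**2 + cr**2).mean(axis=(1, 2))


def dropout_frames(chroma: np.ndarray, ratio: float = 0.5) -> np.ndarray:
    """Indices of frames whose chroma drops below `ratio` times the clip's median."""
    return np.flatnonzero(chroma < ratio * np.median(chroma))
```

A fixed box won't follow a moving subject, so this works best on short, fairly static shots; it's a quick consistency check, not a segmentation metric.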

COMPRESSED HIGHLIGHTS

You'll notice that the highlights seem to be a bit hotter in the colorized material than in the source image. To be fair, this may be my fault. I need to play around with various input rendering transforms to see what the model prefers. Was the model trained on archival? If so, were that archival's color primaries and gamma kept at Rec.601, or were they transformed to Rec.709/BT.1886? I need to read the documentation to find out a bit more. But I would prefer the model not make any gamma changes to my image if I'm going to add this methodology to my pipeline.
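A quick way to check whether the highlights really are hotter is to compare a high percentile of brightness between a source frame and its colorized counterpart. This is a minimal sketch assuming both frames are float arrays in [0, 1], the source as a single-channel grayscale frame; the 99th percentile is an arbitrary choice. The colorized frame is reduced to luma with either the BT.601 or BT.709 weights, since which one matches the model's expectations is exactly the open question.

```python
import numpy as np

# Luma weights applied to gamma-encoded R'G'B' (ITU-R BT.601 and BT.709).
LUMA_WEIGHTS = {
    "601": (0.299, 0.587, 0.114),
    "709": (0.2126, 0.7152, 0.0722),
}


def luma(rgb: np.ndarray, standard: str = "601") -> np.ndarray:
    """Weighted sum of the R', G', B' channels using the chosen standard."""
    kr, kg, kb = LUMA_WEIGHTS[standard]
    return kr * rgb[..., 0] + kg * rgb[..., 1] + kb * rgb[..., 2]


def highlight_shift(source_gray: np.ndarray, colorized_rgb: np.ndarray,
                    pct: float = 99.0, standard: str = "601") -> float:
    """Colorized minus source brightness at the given percentile.

    A positive result means the colorized frame's highlights sit higher
    than the source's.
    """
    src = np.percentile(source_gray, pct)
    out = np.percentile(luma(colorized_rgb, standard), pct)
    return float(out - src)
```

This only measures the shift; it can't tell you whether it comes from a transfer-function change, a primaries mismatch, or the model's own restoration step.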