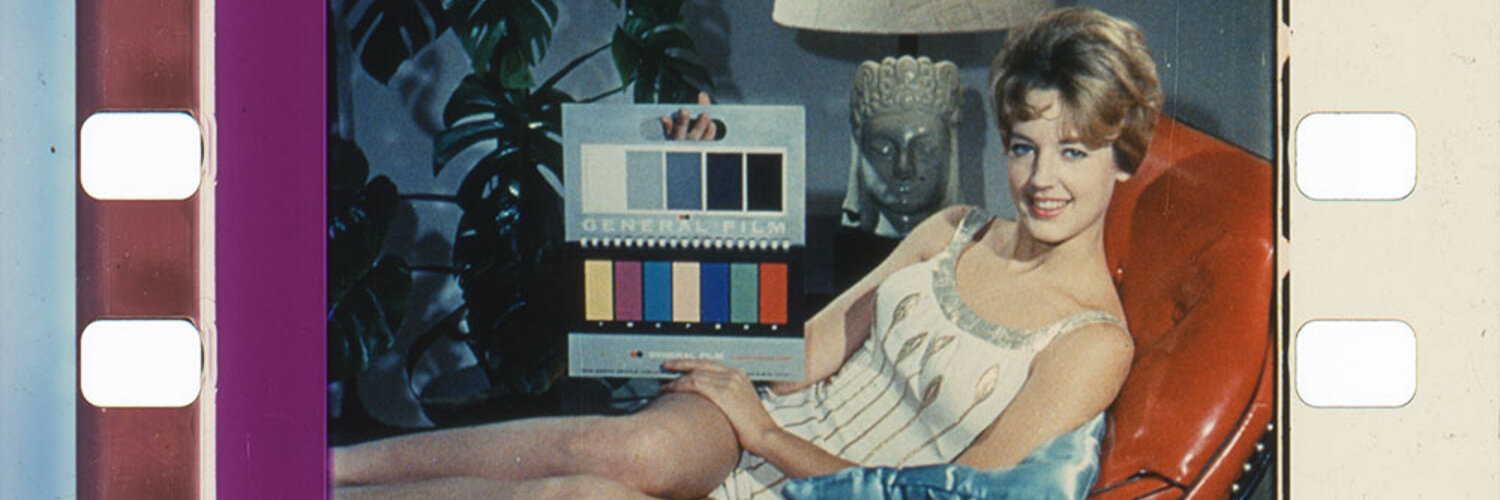

DeepRemaster will now reference these colorized stills and apply a matching colorization to the corresponding video clip.

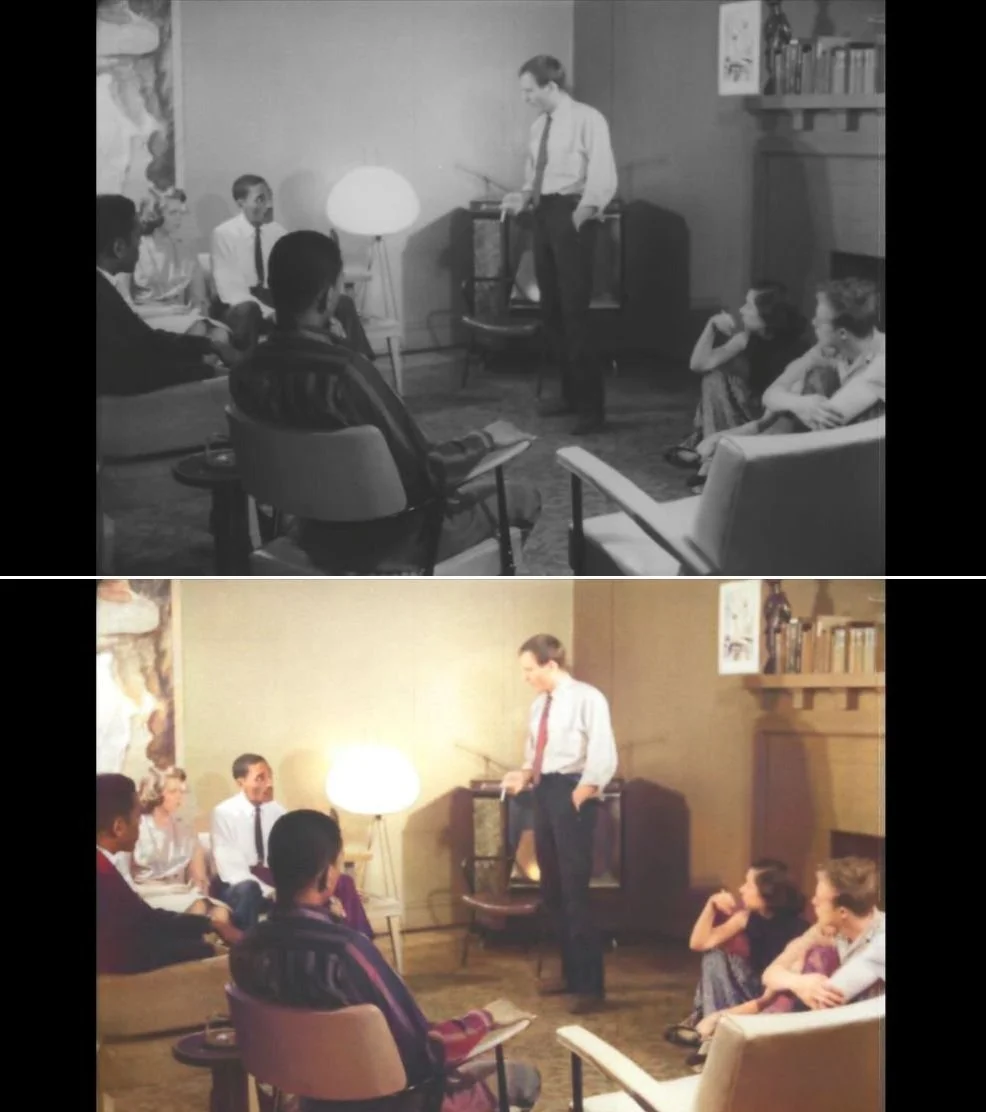

DeepRemaster Results

Needless to say, the initial results are stunning. We’ve certainly come a long way in the past five years with this technology, and DeepRemaster is a significant step forward. That said, a few issues keep coming up, and I’ve listed them below. These are visual observations only; I have not done any quantitative testing to validate them.

RESTORATION

One issue I currently have with DeepRemaster is that the model also seems to be removing what it believes are unwanted artifacts. I would prefer to use my own methods to restore the image prior to colorization. I am, however, still learning to use the parameters of the model so this is something I might be able to address.

FLESH TONE

Darker skin tones don’t seem to be rendering as well as lighter skin tones. I’m not implying that this artifact is the result of the model not being trained with enough darker skin tones. This might be an issue with the model’s ability to segment and effectively colorize areas of the image with lower luminance values. Perhaps there is some sort of sweet spot for flesh tone exposure which I’m not taking advantage of. I have not color graded these source images before or after the fact. I’ve only cleaned up the dust and scratches a bit before colorizing. This might also simply be due to the specific nature of this test material. I need to run other media through the model to see if this is consistent.

MAGENTA SHADOWS

As I mentioned above, DeepRemaster has trouble rendering realistic shadows (not always, but often). This becomes particularly problematic because the darker image areas have a magenta wash to them. You can see in the tests that the darker areas shift toward the line of purples, and because of this there is a global magenta feel to the results.
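One way to put a number on the magenta wash is to measure how far the shadow pixels sit from neutral on the magenta-green axis. The following is a minimal sketch, not part of DeepRemaster: the function name `shadow_magenta_bias`, the 0.2 luma threshold, and the use of Rec.709 luma weights on encoded values are all assumptions chosen for illustration.

```python
import numpy as np

def shadow_magenta_bias(rgb, luma_threshold=0.2):
    """Mean magenta-green offset in pixels darker than luma_threshold.

    rgb: float array of shape (H, W, 3) with values in [0, 1].
    Returns 0.0 for neutral shadows, a positive value for a magenta
    cast, and a negative value for a green cast.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    # Rec.709 luma weights applied to the encoded values (an assumption;
    # Rec.601 material would use different weights).
    luma = 0.2126 * r + 0.7152 * g + 0.0722 * b
    shadows = luma < luma_threshold
    if not shadows.any():
        return 0.0  # no shadow pixels in this frame
    # Magenta is red plus blue with little green, so compare the
    # red/blue average against green inside the shadow mask.
    return float(((r + b) / 2 - g)[shadows].mean())
```

Running this on each colorized frame and plotting the result over time would show whether the cast is constant or scene dependent. The threshold is arbitrary and would need tuning per source.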

INCONSISTENT IMAGE SEGMENTATION

The model often does not recognize things like hands and legs when they appear at unusual angles or perspectives. You’ll notice that people’s faces and limbs sometimes drift in and out of color. I would not attribute this to compression artifacts or heavy macroblocking, as I deblocked the source and cleaned out other compression artifacts before testing. This is certainly one of the major issues with the current model: if there is ever to be broad industry adoption of this technology, consistency will be paramount. The good news is that this is a popular field of research and the models keep improving. It will only be a matter of time before we get there.
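The color drift on faces and limbs could be tracked by following the average chroma of a region across frames and flagging the frames where it collapses toward gray. This is a rough sketch under several assumptions: the region mask is supplied by hand and is non-empty, chroma is approximated as the spread between the largest and smallest RGB channel, and the names `region_chroma_per_frame` and `dropout_frames` and the 0.5 ratio are hypothetical.

```python
import numpy as np

def region_chroma_per_frame(frames, mask):
    """Mean chroma inside a region for every frame.

    frames: float array of shape (T, H, W, 3) with values in [0, 1].
    mask:   bool array of shape (H, W) marking the region (e.g. a face).
    Returns an array of shape (T,).
    """
    frames = np.asarray(frames, dtype=np.float64)
    mask = np.asarray(mask, dtype=bool)
    # Simple chroma proxy: largest channel minus smallest channel.
    chroma = frames.max(axis=-1) - frames.min(axis=-1)  # shape (T, H, W)
    return chroma[:, mask].mean(axis=1)

def dropout_frames(chroma, ratio=0.5):
    """Indices of frames whose chroma is below ratio * median chroma."""
    chroma = np.asarray(chroma, dtype=np.float64)
    return np.flatnonzero(chroma < ratio * np.median(chroma))
```

A static mask only works for locked-off shots. For moving subjects the mask would have to be tracked per frame, which this sketch does not attempt.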

COMPRESSED HIGHLIGHTS

You’ll notice that the highlights seem to be a bit hotter in the colorized material than in the source image. To be fair, this may be my fault. I need to play around with various input rendering transforms to see what the model prefers. Was the model trained on archival footage? If so, were that footage’s color primaries and gamma kept at Rec.601, or were they transformed to Rec.709/BT.1886? I need to read the documentation to find out more. Either way, I would prefer that the model not make any gamma changes to my image if I’m going to add this methodology to my pipeline.
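Whether the highlights really are hotter can be checked by comparing a high percentile of the source luminance against the luma of the colorized output. The sketch below assumes both images are floats in [0, 1], that the source is a single-channel grayscale frame, and that Rec.709 luma weights are appropriate; for Rec.601 material the weights would be 0.299, 0.587, and 0.114 instead. The function name `highlight_shift` is hypothetical.

```python
import numpy as np

# Rec.709 luma weights (an assumption about the output encoding).
REC709_LUMA = np.array([0.2126, 0.7152, 0.0722])

def highlight_shift(source_gray, colorized_rgb, percentile=99.0):
    """Difference in highlight level between output and source.

    source_gray:   float array of shape (H, W), values in [0, 1].
    colorized_rgb: float array of shape (H, W, 3), values in [0, 1].
    Returns a positive value when the colorized highlights are hotter.
    """
    src = np.asarray(source_gray, dtype=np.float64)
    out_luma = np.asarray(colorized_rgb, dtype=np.float64) @ REC709_LUMA
    return float(np.percentile(out_luma, percentile)
                 - np.percentile(src, percentile))
```

A colorization that leaves the tone curve alone should return a value close to zero. Repeating the comparison at several percentiles (for example 50, 90, and 99) would give a crude picture of whether the whole curve moved or only the top end.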